As enterprise leaders in healthcare and the life sciences are keenly aware, Epic has made huge waves in recent months with its announcement that it will transform the architecture of Cogito, its analytics suite, by migrating the suite to Microsoft Azure over the next one to two years.

The opportunities and obstacles created by that tectonic shift, which we discussed at length in a previous article, will doubtless continue to evolve as details about the migration emerge. One thing, however, seems certain: this move will be an important consideration for healthcare and life science data strategies for the foreseeable future.

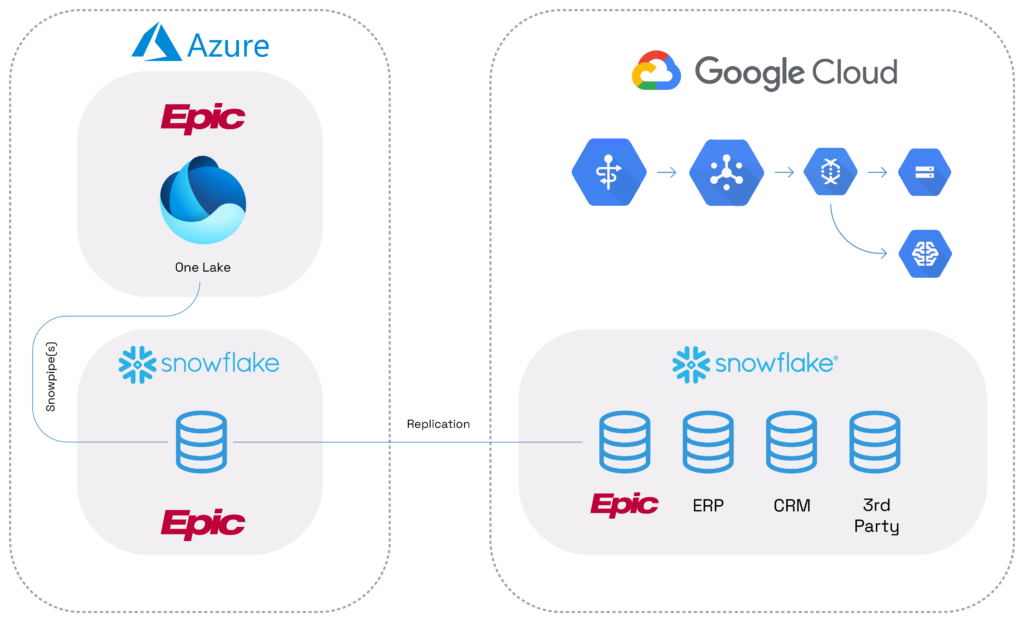

In this article, we will discuss what Cogito’s move to Azure means specifically for health systems that have placed large bets on Google Cloud Platform (GCP), with a focus on how Snowflake can help Epic users build and deploy effective multi-cloud data strategies.

Google Cloud Platform and the Multi-Cloud Conundrum

In recent years, Google has made heavy investments in the healthcare sector. In their words, “Google Cloud’s goal for healthcare is very much a reflection of Google’s overall mission: to organize the world’s information and make it universally accessible and useful.”

This investment has led to tools built specifically to address interoperability challenges in healthcare data, including Google’s Cloud Healthcare API, which is currently available to a small stable of partners. Google Cloud’s customers, meanwhile, include some of the largest and most innovative health systems in the world, some of whom are also customers of Epic Systems.

So what happens to this subset of health systems when their largest and most critical data source is moved to Microsoft Azure?

The Case for Multi-Cloud Strategies

It’s not surprising that one of the best long-term strategies for health systems in this situation, where vital data is stored in two different clouds, is to go “multi-cloud.” Multi-cloud strategies offer several benefits, including:

- Preventing Vendor Lock-In: By incorporating more than one cloud data provider into their data strategies, healthcare and life science systems can sidestep vendor lock-in and keep themselves open to best-of-breed services and applications as the modern data stack continues to evolve and new needs emerge within their organizations.

- A Wider Distribution of Regional Data Centers: Multi-cloud strategies can also help organizations navigate the challenges presented by geo-residency requirements by capitalizing on each cloud provider’s map of regional data centers to achieve optimal presence, capacity, and service for teams operating in different locations.

- Improved Uptime and Business Continuity: Multi-cloud strategies can also help organizations maintain business continuity, maximizing uptime and providing contingency plans for outages at any single cloud provider.

- Faster and Easier Synergies During System Consolidations: For an industry where mergers and acquisitions occur frequently, multi-cloud strategies are uniquely positioned to accommodate structural changes while making consolidations smoother and more cost effective.

It is worth noting that the perks of multi-cloud strategies are by no means exclusive to Epic, Azure, or GCP users; the case above could just as easily apply to a healthcare system leveraging AWS and Epic, for instance. For the sake of illustration in this particular article, however, we will focus on a GCP scenario.

Barriers to Operationalizing Multi-Cloud Strategies

The downside to data strategies that leverage more than one cloud data partner, however, is that they are commensurately complex to implement and maintain. All of the major cloud platform providers, including AWS, GCP, and Azure, have proprietary (and therefore incompatible) APIs for managing data, making it difficult for organizations to copy or share data from cloud to cloud and resulting in data silos that disrupt interoperability across the organization.

What’s more, because developers skilled in several clouds at once are very difficult to find and retain, organizations often end up with a dedicated team for each cloud they work in, further siloing data and disrupting data sharing across the enterprise.

To provide a bit more industry-wide context, most large health systems are still in the early-to-mid stages of their data innovation journeys: from data chaos (i.e., siloed, on-premises, legacy data solutions requiring a great deal of manual intervention) to order (i.e., cloud-based, interoperable systems), and from order to insight. This means they are just beginning to grow comfortable with single-cloud strategies and are not yet equipped to handle the recruitment, retention, and IT upskilling required to maintain multiple cloud services at once. Many of these systems also operate regionally and must account for geographically determined latency when selecting a cloud service.

What Are the Options for Epic Customers with Other Cloud Investments?

Without Snowflake, one possible solution to the multi-cloud conundrum would be for an organization to use Google’s Storage Transfer Service to copy data from Azure Blob Storage into Google Cloud Storage. Storage Transfer Service has the benefit of being a no-code option, but it comes with its own cost and security challenges.

Another possible solution for Epic customers, of course, is to simply move all of their data into Epic’s Cogito databases (i.e., Microsoft Fabric). Historically, this kind of migration brought cost and scalability challenges for organizations running on-premises Epic Cogito databases. Those concerns largely disappear in the cloud but, as highlighted above, moving everything into Azure would now amount to vendor lock-in, requiring health systems to give up their investments in other cloud solutions and any unique features those solutions bring to the table.

On the expense side, a Storage Transfer Service pipeline incurs network egress charges for data leaving Azure as well as a charge for each cloud storage operation. These costs can add up quickly given the size of some data domains within Epic.
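To make the scaling behavior concrete, here is a minimal back-of-the-envelope model of those two cost components. It is a sketch under stated assumptions, not a pricing tool: the rates are hypothetical placeholders rather than published prices for Azure, Google Cloud, or any other provider, each transferred object is assumed to cost one billable storage operation, and `TransferRates` and `estimate_monthly_transfer_cost` are illustrative names only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TransferRates:
    """Illustrative pricing inputs. These are placeholders, NOT published prices."""
    egress_per_gb: float      # hypothetical cost per GB leaving the source cloud
    per_1k_operations: float  # hypothetical cost per 1,000 storage operations


def estimate_monthly_transfer_cost(
    gb_per_run: float,
    objects_per_run: int,
    runs_per_month: int,
    rates: TransferRates,
) -> float:
    """Rough monthly cost of repeatedly copying a data domain across clouds.

    gb_per_run and objects_per_run describe the data actually moved in each run.
    Simplifying assumption (hypothetical): each object costs one storage operation.
    """
    if gb_per_run < 0 or objects_per_run < 0 or runs_per_month < 0:
        raise ValueError("inputs must be non-negative")
    egress_per_run = gb_per_run * rates.egress_per_gb
    operations_per_run = objects_per_run / 1000 * rates.per_1k_operations
    return runs_per_month * (egress_per_run + operations_per_run)


if __name__ == "__main__":
    # Placeholder rates and volumes, chosen only to show how the terms scale.
    rates = TransferRates(egress_per_gb=0.08, per_1k_operations=0.005)
    cost = estimate_monthly_transfer_cost(
        gb_per_run=2_000, objects_per_run=1_000_000, runs_per_month=30, rates=rates
    )
    print(f"Estimated monthly cost: {cost:,.2f}")
```

With these placeholder inputs the script prints `Estimated monthly cost: 4,950.00`. The point is the shape of the formula rather than the figure: the egress term grows with both data volume and sync frequency, so a daily refresh of a large Epic data domain multiplies quickly.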

Security, meanwhile, brings its own slew of challenges. Epic implementations typically come with extensive role-based access control, and it remains to be seen how Epic will implement this security as it moves Cogito to Azure. Regardless, copying data from Azure to GCP adds complexity and could require reimplementing these role-based controls in multiple places.

For the many healthcare systems that are already deeply invested in both Epic and GCP, Cogito’s move to Azure means that they have arrived at something of an impasse.

Making Cross-Cloud and Multi-Cloud Strategies Possible with Snowflake

Fortunately for these joint customers, Snowflake’s ability to replicate and share data across clouds and regions with Cross-Cloud Snowgrid offers another path to a multi-cloud strategy. Better yet, it is a relatively simple solution with minimal code and low maintenance requirements.

This cross-cloud solution also means that customers can keep what they like from Epic and GCP while taking advantage of the lengthy catalog of additional features Snowflake offers.

In the long term, this cross-cloud interoperability could also be used to build true multi-cloud solutions in which organizations run multiple clouds in concert. A unified data management platform such as Snowflake allows data to be shared, replicated, and accessed regardless of where it is stored, effectively bridging cloud silos and overcoming the portability issues that otherwise make cross-cloud operations untenable.

Seamless replication across clouds with Snowflake allows organizations to make the most of Epic’s Azure offering while taking advantage of best-of-breed services within their existing cloud investments.
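To give a sense of how little code this replication can involve, the sketch below generates the SQL for a Snowflake replication group. It assumes a hypothetical setup: Cogito data has already been landed in a Snowflake account hosted in an Azure region, and a second account in the same Snowflake organization is hosted in a GCP region. The statements follow Snowflake’s documented `CREATE REPLICATION GROUP`, `AS REPLICA OF`, and `ALTER REPLICATION GROUP ... REFRESH` syntax, but every account, group, and database name here is a placeholder, and prerequisites (such as enabling replication for both accounts at the organization level) and actually executing the statements are left out.

```python
from __future__ import annotations

import re
from typing import Sequence

# Simple guard for unquoted Snowflake identifiers; quoted identifiers are not handled.
_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")


def _check(name: str) -> str:
    """Reject anything that is not a plain identifier (guards against injection)."""
    if not _IDENT.match(name):
        raise ValueError(f"unsupported identifier: {name!r}")
    return name


def _check_account(org_account: str) -> str:
    """Accept '<org_name>.<account_name>' account identifiers."""
    parts = org_account.split(".")
    if len(parts) != 2:
        raise ValueError(f"expected <org>.<account>, got {org_account!r}")
    return ".".join(_check(p) for p in parts)


def primary_account_sql(group: str, databases: Sequence[str],
                        target_accounts: Sequence[str], every_minutes: int) -> list[str]:
    """Statements for the SOURCE account (hypothetically, the Azure-hosted one)."""
    if not databases or not target_accounts or every_minutes <= 0:
        raise ValueError("need databases, target accounts, and a positive schedule")
    dbs = ", ".join(_check(d) for d in databases)
    accounts = ", ".join(_check_account(a) for a in target_accounts)
    return [
        f"CREATE REPLICATION GROUP IF NOT EXISTS {_check(group)}\n"
        f"  OBJECT_TYPES = DATABASES\n"
        f"  ALLOWED_DATABASES = {dbs}\n"
        f"  ALLOWED_ACCOUNTS = {accounts}\n"
        f"  REPLICATION_SCHEDULE = '{every_minutes} MINUTE'"
    ]


def secondary_account_sql(group: str, source_account: str) -> list[str]:
    """Statements for the TARGET account (hypothetically, the GCP-hosted one)."""
    g = _check(group)
    src = _check_account(source_account)
    return [
        f"CREATE REPLICATION GROUP IF NOT EXISTS {g} AS REPLICA OF {src}.{g}",
        # Optional manual refresh; scheduled refreshes follow the primary
        # group's REPLICATION_SCHEDULE.
        f"ALTER REPLICATION GROUP {g} REFRESH",
    ]


if __name__ == "__main__":
    # All names below are hypothetical placeholders.
    for stmt in primary_account_sql("epic_cogito_rg", ["cogito_analytics"],
                                    ["myorg.gcp_analytics"], every_minutes=10):
        print(stmt + ";")
    for stmt in secondary_account_sql("epic_cogito_rg", "myorg.azure_epic"):
        print(stmt + ";")
```

The design choice worth noting is that the schedule lives on the primary group, so once the secondary replica exists, ongoing synchronization needs no custom pipeline code; role-based access can then be managed within Snowflake rather than rebuilt separately in each cloud’s storage layer.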

Tapping into Cross-Cloud and Multi-Cloud Strategies with Snowflake and Hakkōda

Hakkōda’s data teams bring expertise from across the modern data stack to help our clients build robust, future-proof data solutions that leverage Snowflake’s capacity to quickly, securely, and reliably share data across multiple clouds and regions. We are well versed in the particular complexities and impediments surrounding healthcare and life science data, and offer highly flexible solutions that can bring the best of Epic, GCP, AWS, and other public cloud solutions together with best-of-breed tools from across the modern data stack to solve your organization’s most challenging data problems.

Ready to learn more about how Snowflake can help your organization lead the charge for healthcare data innovation with cross-cloud or multi-cloud data strategies? Let’s talk.